5 Steps to Debug Anything

Root-causing issues is a powerful skill, and one you need in order to level up. In this post, I share the tips that have worked for me.

Debugging anything is an ambitious goal, so let me first explain why I am writing this guide.

Why I care about debugging anything

As an infrastructure engineer, I am responsible for a domain in our internal cloud. Our customers run microservices on this cloud, and sometimes we encounter complex, unprecedented issues that require intricate debugging. These issues can span multiple domains, so it is crucial to be able to investigate the underlying causes starting from the top-level symptoms. That is why being able to debug anything matters!

I do not advocate for debugging everything yourself

My philosophy of debugging anything is not about finding the root cause of every issue on your own. Instead, it is about being able to work toward the root cause, with help when needed. Of course, if the problem is critical and time-sensitive, and someone else can debug it faster than you, they should take over.

Here are 5 steps that have helped me and all my mentees debug anything.

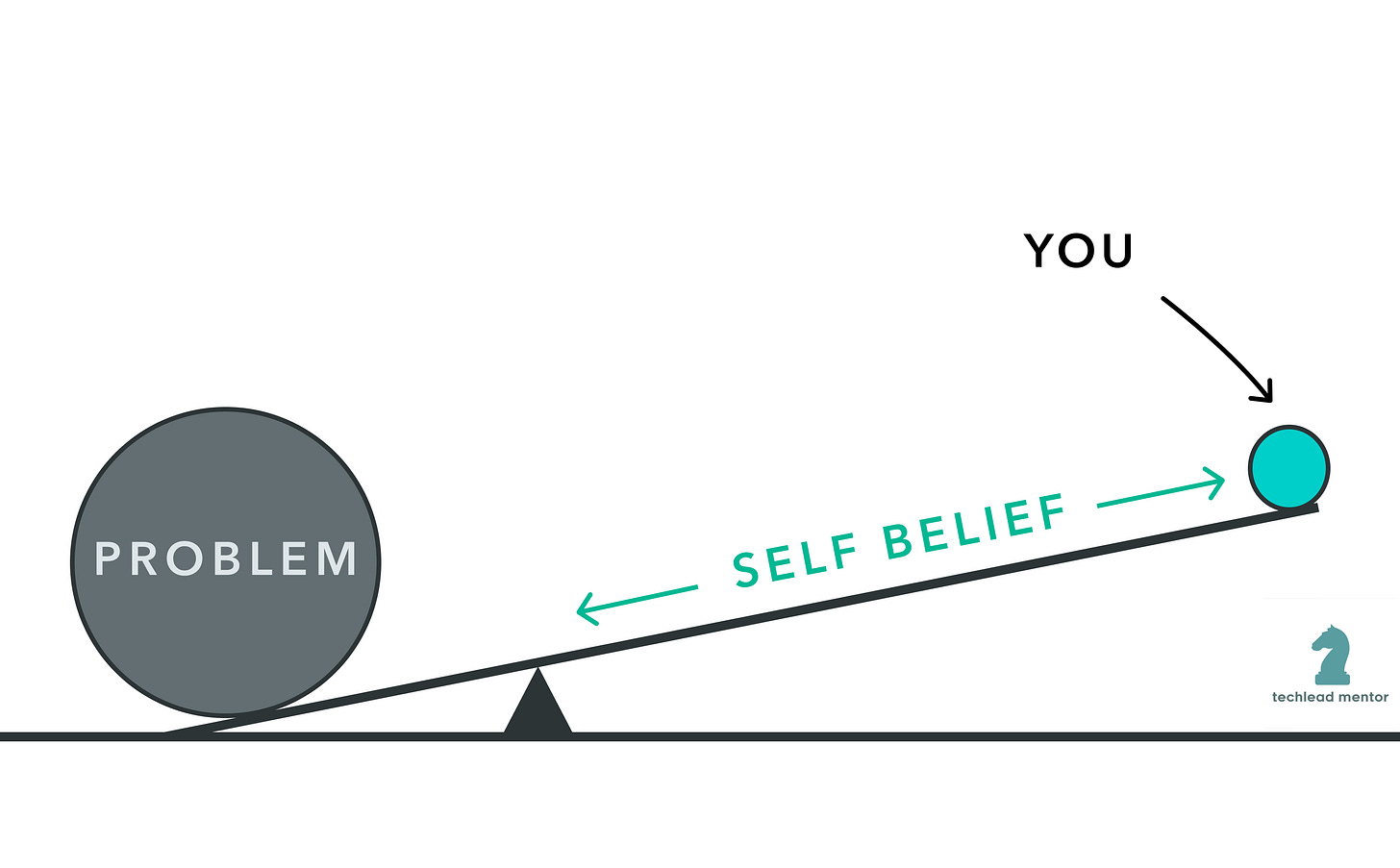

Step 0 - Believe you can

Believe in yourself, and don't give up on an investigation before you have tried. Many people hold back because they feel they lack context or expertise, but it's important to stay curious.

However, don't be overconfident. Understand where your knowledge is lacking, and be open to seeking help.

Step 1 - Learn the basics

If your foundation is weak, it will be extremely hard to be productive in your debugging sessions.

You should:

Know where to find the logs, process core dumps, alarms, metrics, dashboards, runbooks, and so on.

Have a reasonable grasp of how various components interact with each other.

Know how to search the codebase: not just your team's, but also your dependencies'.

Remember, it's okay if you don't know everything.
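To make the codebase-search point concrete, here is a minimal Python sketch that looks for where a log message originates across several local checkouts, such as your own repo and a dependency's. The function name `search_code`, the set of file extensions, and the assumption that the repos are cloned locally are all illustrative; in practice, `grep -rn` or your code search tool does the same job.

```python
import re
from pathlib import Path
from typing import Iterator

# File extensions worth scanning; an assumption, adjust for your stack.
SOURCE_SUFFIXES = {".py", ".go", ".java", ".ts", ".rs", ".cc", ".h"}


def search_code(roots: list[str], pattern: str) -> Iterator[tuple[str, int, str]]:
    """Yield (path, line_number, line) for every source line matching `pattern`.

    `roots` are hypothetical local checkouts (your repo plus dependencies).
    """
    regex = re.compile(pattern)
    for root in roots:
        for path in Path(root).rglob("*"):
            # Skip directories and non-source files.
            if not path.is_file() or path.suffix not in SOURCE_SUFFIXES:
                continue
            try:
                text = path.read_text(encoding="utf-8", errors="replace")
            except OSError:
                continue  # unreadable file: skip it rather than abort the search
            for lineno, line in enumerate(text.splitlines(), start=1):
                if regex.search(line):
                    yield str(path), lineno, line.strip()
```

For example, `search_code(["./my-service", "./vendor/queue-client"], r"overload")` (hypothetical paths) yields one tuple per matching line. The pattern is a regular expression, so wrap a literal error message in `re.escape` if it contains special characters.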

Step 2 - Validate your understanding

To make progress, you need to truly understand what the bug or issue is. I have seen people struggle when they cannot clearly explain the problem and end up clicking on different graphs and charts without making any progress. To avoid this, it is important to carefully analyze the situation and validate your understanding at each step.

Here is what works for me, using an example situation: imagine there is an active Service Overload alert.

What is a brief description of the problem?

"The alert for service overload fired for all of production or scoped to X regions."

Look for metrics that prove the problem. Even better, use the timeline view to understand when the issue actually started.

Look at the single "overload" metric that is over the threshold.

Maybe you find out that it started at 10 am today.

Can you explain the metrics that indicate there is a problem?

How is the "overload" metric calculated? Is it derived from several resource metrics, or is it something artificial?

Is the metric pointing at a real problem? Which customers are impacted?

Are the requests failing due to server overload? If so, what percentage?

What is the error? Are they timing out?

Are the requests taking longer to complete?

Are there more requests coming into the service?

Ensure you are not distracted by unimportant and unrelated problems.

Don't focus too much on outlier requests that took a long time even before this overload alert fired.

Don't focus on customers that are sending a handful of trivial requests.

This can be hard for someone inexperienced, so rely on your runbooks or previous investigations to tell real problems apart from noise.

My point here is to be curious and ask “why” at every step. When you look beyond surface-level concepts, you get closer to the root cause.
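Several of the Step 2 questions can be turned into numbers. Below is a minimal Python sketch, assuming you have already exported the overload metric as `(timestamp, value)` pairs and have request records with a status code and latency. The `Request` record, the rule that 5xx means failure, and the three-sample definition of a "sustained" breach are illustrative assumptions, not a real monitoring API. `onset` finds when the metric actually started breaching (ignoring one-off spikes), and `summarize` compares volume, failure percentage, and median latency before and after that point.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Request:
    """One request record (hypothetical shape; adapt it to your logs)."""
    ts: datetime
    status: int        # HTTP-style status; 5xx counts as a failure here
    latency_ms: float


def onset(samples: list[tuple[datetime, float]], threshold: float,
          min_consecutive: int = 3) -> Optional[datetime]:
    """Return the start of the first run of `min_consecutive` samples above
    `threshold`, so one-off spikes are ignored. None if there is no such run."""
    run_start: Optional[datetime] = None
    run_len = 0
    for ts, value in sorted(samples):
        if value > threshold:
            if run_len == 0:
                run_start = ts
            run_len += 1
            if run_len >= min_consecutive:
                return run_start
        else:
            run_len = 0  # breach ended; start counting again
    return None


def summarize(requests: list[Request], start: datetime) -> dict[str, float]:
    """Compare volume, failure percentage, and median latency before/after `start`."""
    before = [r for r in requests if r.ts < start]
    after = [r for r in requests if r.ts >= start]

    def failure_pct(rs: list[Request]) -> float:
        return 100.0 * sum(r.status >= 500 for r in rs) / len(rs) if rs else 0.0

    def median_latency(rs: list[Request]) -> float:
        return median(r.latency_ms for r in rs) if rs else 0.0

    return {
        "requests_before": len(before),
        "requests_after": len(after),
        "failure_pct_before": failure_pct(before),
        "failure_pct_after": failure_pct(after),
        "median_latency_before_ms": median_latency(before),
        "median_latency_after_ms": median_latency(after),
    }
```

Pass windows of equal length so the request counts are comparable. The sketch uses median latency rather than the mean so that a few slow outliers from before the alert don't skew the picture.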

Step 3 - Scope down the problem

Don't get stuck trying to tackle a widespread problem as a whole. To get to the actual root cause, narrow it down to something smaller and well scoped.

Using the same example of service overload:

Can you look at a single instance?

Why are requests taking longer? Is it all types of requests or specific ones?

Is a downstream dependency taking longer?

Can you enable high-fidelity logging to learn more about this single server?

If there are more requests coming in than expected, can you narrow down which customer is sending them?

Did other things happen around that time?

Was there a config change? A new code deployment? Something else?

Has your rate limiting or autoscaling stopped working?

Can you issue dummy requests to see if that reproduces the problem?

Don't get stuck trying to tackle the behemoth. Instead, break down the problem into smaller, manageable issues.
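A few of the scoping questions above reduce to simple grouping. This Python sketch ranks customers by how much their request volume grew, and lists config changes or deployments that landed shortly before the onset. The input shapes (one customer ID per request for each window, and `(timestamp, description)` pairs for change events), the 100-request floor, and the two-hour lookback are hypothetical defaults to tune for your service.

```python
from collections import Counter
from datetime import datetime, timedelta


def top_growth(baseline: list[str], incident: list[str],
               min_requests: int = 100, limit: int = 5) -> list[tuple[str, int]]:
    """Rank customers by how many more requests they sent during the incident.

    `baseline` and `incident` hold one customer ID per request, from two
    windows of equal length. Customers with fewer than `min_requests`
    incident requests are skipped, so trivial traffic doesn't distract you.
    """
    before, after = Counter(baseline), Counter(incident)
    growth = [(customer, count - before[customer])  # Counter returns 0 if absent
              for customer, count in after.items() if count >= min_requests]
    growth.sort(key=lambda item: item[1], reverse=True)
    return growth[:limit]


def changes_near(changes: list[tuple[datetime, str]], onset: datetime,
                 lookback: timedelta = timedelta(hours=2)) -> list[tuple[datetime, str]]:
    """Return config changes or deployments that landed within `lookback` before onset."""
    return sorted(c for c in changes if onset - lookback <= c[0] <= onset)
```

The same `Counter` comparison works for other dimensions, such as request type or server instance, if you pass those keys instead of customer IDs.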

Step 4 - Ask for help

Great, you have validated the problem to a certain extent and scoped it down, but you are unable to make further progress. That is fine: now is the time to ask for help.

One of the hardest things to do is ask for help the "right" way during an intense situation. Try these:

Give a brief description of the problem with supporting metrics.

Articulate your understanding of the situation and your working hypothesis.

Explain why you believe your hypothesis is likely true. Provide supporting metrics and logs.

Clearly state what you do not know and the gaps in proving your hypothesis.

Many people can explain what they understand but struggle to identify what they don't understand.

Your peers should not have to play a guessing game while trying to help you.

For example, if you mention that you don't know how to find downstream latencies, or that you are unaware of all the downstream dependencies, they can provide concrete guidance.

Don't share information piece by piece.

It is important to provide a bird's-eye view of the problem while asking for specific things.

The worst thing you can do is ask overly specific questions that only help you prove your hypothesis. Your peers may not have the context to correct you if that hypothesis is wrong, and this will lead you in the wrong direction.

Treat your peers as a brainstorming resource rather than a Q&A bot.

Step 5 - Refine & Iterate

While the issue is ongoing, repeat the steps above and iterate. Also note:

If you are seeking help, don't just sit back. Observe how your peers are investigating the issue.

Help out with side investigations. Look at the same things your peers are investigating. That way, you can keep up with the investigation and even take over when it reaches a safe point.

If you need more information, add instrumentation to the code and deploy it.
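When existing telemetry can't answer a question, such as which downstream dependency is slow, a small amount of instrumentation often can. Here is a minimal Python sketch of a timing decorator built on the standard `logging` and `time` modules. The `timed` decorator, the logger name, and `fetch_inventory` (a stand-in for a real downstream call) are hypothetical.

```python
import functools
import logging
import time
from typing import Any, Callable, TypeVar

F = TypeVar("F", bound=Callable[..., Any])
log = logging.getLogger("instrumentation")  # hypothetical logger name


def timed(dependency: str) -> Callable[[F], F]:
    """Log how long each call to `dependency` takes, and whether it raised."""
    def decorator(func: F) -> F:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            outcome = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                outcome = "error"
                raise  # re-raise so callers still see the failure
            finally:
                # Runs on success and failure, so every call is measured.
                elapsed_ms = (time.perf_counter() - start) * 1000
                log.info("dependency=%s outcome=%s latency_ms=%.1f",
                         dependency, outcome, elapsed_ms)
        return wrapper  # type: ignore[return-value]
    return decorator


@timed("inventory-service")  # hypothetical downstream dependency
def fetch_inventory(item_id: str) -> dict:
    time.sleep(0.01)  # stand-in for a real network call
    return {"item_id": item_id, "count": 3}
```

Call `logging.basicConfig(level=logging.INFO)` once at startup, and every call to `fetch_inventory` logs one line with its latency and outcome. Logging in the `finally` block means failed calls are measured too, which matters when the slow path is also the failing path.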

After the issue is resolved, look for ways to improve your processes.

Were there logs, metrics, and dashboards that you weren't aware of?

How did your peer debug it faster? Did they have context that you didn't?

Learn and build up your knowledge base.

Over the last decade, I have investigated many issues, and some even felt like taking a shot in the dark. The key was to follow a systematic approach like this. It helped me build the muscle to truly “debug anything”.

Of course, these steps serve as a template and need to be converted into actionable items that are applicable to your specific software and problem. You also need to practice this a few times to see results. Lastly, this is a great strength for any engineer who wants to rapidly grow in their career. So invest in this essential skill today.

If other tips have worked for you, please leave them in the comments.

If you haven’t subscribed, please do. Also follow me on LinkedIn, where I post more frequently.

If you're seeking a mentor, feel free to reach out and learn more about how I can help you.

Interested in more reading? Check out my recommendations.

Things you shouldn't miss from the last week

How to burnout a software engineer, in 3 easy steps by Leonardo Creed

My secret for growing from engineer to CTO by Gregor Ojstersek

30 lessons I wish I could go back and tell my junior self by Caleb Mellas

Also hoping to cross 1k subscribers by this weekend!
